EnhanceIO and Check_MK plugin

by BenV on Jan.01, 2015, under Check MK, Software

A while ago, when faced with the question ‘why is my disk slow?’, I realized: “hej, I have an SSD… let’s use it as cache!”

Easier said than done, because these days you have tons of options. A quick glance shows BCache, DM-Cache, Facebook’s Flash-Cache, or what I went for, which is based on Flash-Cache: EnhanceIO. There are probably more of them; while writing this I ran into this article on LVM cache, which sounds interesting too.

Here’s a little comparison between a few of the above options: different ssd to hdd cacheing options on askubuntu.com.

My reason for going with EnhanceIO is that, unlike BCache (which needs a specially formatted disk in B-cache format), you can apply EnhanceIO to any block device. DM-Cache looked too difficult to use at first glance, and Flash-Cache sounded like the precursor to EnhanceIO, so why pick the former 🙂

So far I’m happy with my choice.

Setting up EnhanceIO does require patching your kernel, however (unless your distro supplies a patched kernel; on Arch, for instance, you can simply install the enhanceio-dkms-git package from AUR). Easy enough for me: check out the git tree, copy the Driver/enhanceio directory to linux/drivers/block and patch the Kconfig and Makefile with the supplied config.patch (although I did it manually). Compile, reboot and it’s time to test.

After rebooting into this kernel with EnhanceIO you can use the supplied eio_cli to create, modify and view the caches. For testing I created a cache using a 20GB LVM volume on SSD as the cache device to speed up my entire ‘slow’ LVM volume group, which is backed by a software RAID volume named LVM:

root@machine# lvcreate -L 20G -n EnhanceIO-LVM FastStorage

root@machine# eio_cli create -d /dev/md/LVM -s /dev/FastStorage/EnhanceIO-LVM -c 'LVMCache' -m wb -p lru

# Creates a cache for /dev/md/LVM using the SSD partition /dev/FastStorage/EnhanceIO-LVM, in write-back mode with the lru replacement policy.

That’s all there is to it! It will even write a udev rule so the cache automatically gets set up when your system reboots.

Immediately you can ‘feel’ writes being faster. Note that there are 3 modes to run the cache:

- read-only: in this mode only reads are cached on SSD, while writes go the traditional route. (advantage: safest, at no point is your data at risk)

- write-through: in this mode writes are also cached on SSD but still go immediately to your spinning disk (advantage: as safe as read-only but now your writes are also cached)

- write-back: this mode has the great advantage of not having to wait for writes to be committed to rotating disk, so for write-heavy machines this offers a great speedup. Writes first go to SSD and later get written back to spinning disk, and since SSDs don’t just lose data when powered off this -should- be safe. Note however that EnhanceIO says DO NOT USE THIS ON YOUR ROOT DEVICE because issues with mount order and fsck on the read-only volume during boot can mess up your data! However, I’ve been using this mode on 3 machines for about half a year now with no issues whatsoever.

Next to that you can select the replacement policy for when the cache gets full: ‘lru’ for least recently used, ‘random’ for what it says (they claim it uses more memory though), or ‘fifo’ for first in, first out (uses least memory). Personally I’ve been using ‘lru’ on all caches so far.
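To make the mode and policy choice a bit more concrete, here is a small dry-run sketch. The helper name `eio_create_cmd` is made up for illustration, and the short mode names `ro` and `wt` are an assumption on my part (only `wb` and `lru` appear in the command above), so check them against your eio_cli before relying on this. It only prints the command and never touches a device.

```shell
#!/bin/sh
# Hypothetical helper: prints (does NOT run) an eio_cli create command.
# Assumption: -m accepts ro, wt and wb; only "wb" is shown in the post itself.
# The policy is passed through unchecked because its exact spelling may differ.
eio_create_cmd() {
    src=$1 ssd=$2 name=$3 mode=${4:-wt} policy=${5:-lru}
    case $mode in
        ro|wt|wb) ;;
        *) echo "unknown cache mode: $mode" >&2; return 1 ;;
    esac
    printf 'eio_cli create -d %s -s %s -c %s -m %s -p %s\n' \
        "$src" "$ssd" "$name" "$mode" "$policy"
}
```

Calling `eio_create_cmd /dev/md/LVM /dev/FastStorage/EnhanceIO-LVM LVMCache wb lru` prints the same command used earlier. Leaving out the last two arguments falls back to write-through and lru, which is just the conservative default I picked for this sketch.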

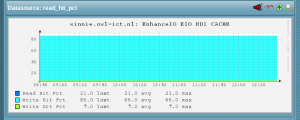

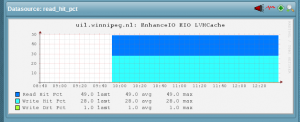

Now of course you’re curious about the performance of these caches. As usual, this depends a lot on what you run on your machines. On machines I use as webserver/mailserver/database server I mostly see the benefit of write-back mode: about 85% write cache hits but only 20% read cache hits (this is with a small cache of about 15GB for about 500GB of spinning disk in use).

On the other hand, my home machine with a 40GB cache for 4TB of LVM (music/video/homedirs) has a 50% read hit rate but only a 28% write hit rate.

You can find the statistics in /proc/enhanceio/CACHENAME/stats.
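If you want a hit rate on the command line, something like the sketch below should do. It assumes the stats file consists of `name value` lines and that counters named `reads`, `read_hits`, `writes` and `write_hits` exist. Both are assumptions from memory, so look at your own stats file first. The function names are made up.

```shell
#!/bin/sh
# Print the value of one counter from an EnhanceIO stats file.
# Assumption: every line looks like "<name> <value>", separated by whitespace.
eio_stat() {
    awk -v key="$2" '$1 == key { print $2; found = 1; exit }
                     END { exit !found }' "$1"
}

# Hit percentage from two counters, e.g. read_hits out of reads.
# Prints 0.0 when the total is zero to avoid dividing by zero.
eio_hit_pct() {
    hits=$(eio_stat "$1" "$2") || return 1
    total=$(eio_stat "$1" "$3") || return 1
    awk -v h="$hits" -v t="$total" \
        'BEGIN { if (t > 0) printf "%.1f\n", 100 * h / t; else print "0.0" }'
}
```

For the cache created earlier that would be `eio_hit_pct /proc/enhanceio/LVMCache/stats read_hits reads`.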

Check MK and EnhanceIO

What’s better for keeping an eye on your cache than Check_MK? You tell me :p

Anyway, I wrote a little Check_MK package that will find your EnhanceIO caches and monitor their status. It’ll report all stats as performance data so it can be graphed in pnp4nagios.

Note that the current pnp4nagios template only shows the read/write/dirty write hit percentages. Maybe I’ll make some extra graphs in an updated version of the plugin (suggestions are welcome!).
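For those wondering what the agent side of such a check roughly looks like: a Check_MK agent plugin is just a script that prints a `<<<section>>>` header followed by raw data. The sketch below is not the packaged plugin, only a minimal illustration. The section name `enhanceio` and the `[cachename]` marker lines are made up here, and the matching check on the server side is not shown.

```shell
#!/bin/sh
# Sketch of a Check_MK agent plugin for EnhanceIO (NOT the packaged plugin).
# Assumptions: the section name "enhanceio" and the "[cache]" marker lines
# are invented for this example; one directory per cache holds a stats file.
eio_agent_section() {
    root=${1:-/proc/enhanceio}
    # No EnhanceIO on this host: print nothing at all.
    [ -d "$root" ] || return 0
    echo '<<<enhanceio>>>'
    for stats in "$root"/*/stats; do
        [ -r "$stats" ] || continue
        cache=${stats%/stats}
        echo "[${cache##*/}]"
        cat "$stats"
    done
}
```

A real plugin file would end with a plain `eio_agent_section` call and live in the agent’s plugins directory. The optional argument is only there so the function can be pointed at a test directory instead of /proc/enhanceio.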

You can download the plugin here:

[Download not found]

Some pretty graphs that show the Check_MK EnhanceIO plugin in action:

![]()

May 24th, 2015 on 21:17

Hi,

when you get around to it, can you please repackage your plugins?

The path for the agent plugin must be local/share/check_mk/agents/plugins not local/share/check_mk/agents.

The isc_dns one has the right path, but its check file is called isc_bind9 whereas it registers the check / inventory functions as ‘dns’. That mismatch breaks check_mk for anyone who installs the plugin once they inventorize it.

(Also, the naming convention doesn’t allow ‘dns’; isc_bind9 or isc_dns would be better, maybe isc_bind9_status.)

May 24th, 2015 on 22:25

@darkfader: Erhm, while I made a bunch of plugins I didn’t make any with isc_dns, so maybe you’re asking the wrong person 🙂

July 10th, 2015 on 17:25

Can I ask how you were able to enable write-back on a root device? When I enable it on my rootFS and reboot, I completely lose the cache set every time. Let me know what your secret is.

July 10th, 2015 on 17:39

@minhgi: May I remind you that EnhanceIO specifically says not to do that? :-p

See this for ‘why not’ – https://github.com/stec-inc/EnhanceIO/issues/30

Other than that it should just work: EnhanceIO should create udev rules to recreate the cache after a reboot. In case you’re running Slackware you could edit /etc/rc.d/rc.S to bring up the cache before it does fsck.

July 20th, 2015 on 22:41

Sorry BenV,

I finally got the chance to edit rcs, but could not get it to work. Can you post your /etc/rc.d/rc.S?

By the way, I am using ubuntu